When we released Veeam Backup & Replication version 6, one of the big “what’s new” items was our enterprise scalability through our distributed architecture and automatic intelligent load balancing. The move to the proxy/repository architecture was a big one for us because previously all backup activities ran through a single backup server. There were several reasons for moving to this architecture…

- Distribute backup processing across multiple proxy servers to make it easier for you to scale your B&R deployment

- Achieve higher availability and redundancy: if a proxy goes down, another one can still complete the task – no more single point of failure

- Reduce impact from backups on production infrastructure through intelligent load balancing

- Dramatically simplify job scheduling (by automatically controlling the desired task concurrency)

- Control backup storage saturation (for when the backup storage is too slow)

With automatic intelligent load balancing, Veeam Backup & Replication picks the best proxy server (best in terms of connectivity to VM data, as well as least loaded with other tasks) to perform the backup for a VM each time the job runs. With v7 we enhanced this further by adding parallel processing, meaning a VM with multiple virtual disks could be backed up by more than one proxy at the same time. In simple terms, here’s the priority order a backup server uses to determine the best proxy for a given VMware virtual disk:

- Direct SAN (direct storage access, no impact on production hosts)

- Hot add (direct storage access through the ESXi I/O stack)

- Network Block Device (NBD) (VM data is retrieved over the network through the ESXi management interface)
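To make the selection order concrete, here is a minimal Python sketch. It assumes a simplified model in which each proxy knows which transport modes it can use for each datastore and how many tasks it is currently running. The `Proxy` class, the `pick_proxy` helper, and the mode names are hypothetical illustrations rather than Veeam APIs, and the real scheduler weighs more factors than this.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

# Lower number = preferred, following the priority order listed above.
MODE_PRIORITY = {"direct_san": 0, "hot_add": 1, "nbd": 2}


@dataclass
class Proxy:
    name: str
    # Hypothetical model: datastore name -> transport modes this proxy can use there.
    access: Dict[str, Set[str]] = field(default_factory=dict)
    running_tasks: int = 0


def best_mode(proxy: Proxy, datastore: str) -> Optional[str]:
    """Return the highest-priority transport mode the proxy has for this datastore."""
    modes = proxy.access.get(datastore, set())
    return min(modes, key=MODE_PRIORITY.__getitem__, default=None)


def pick_proxy(proxies: List[Proxy], datastore: str) -> Optional[Tuple[Proxy, str]]:
    """Pick by transport mode first, then by fewest running tasks."""
    candidates = []
    for proxy in proxies:
        mode = best_mode(proxy, datastore)
        if mode is not None:
            candidates.append((proxy, mode))
    if not candidates:
        return None  # no proxy can reach this datastore
    return min(candidates, key=lambda pm: (MODE_PRIORITY[pm[1]], pm[0].running_tasks))


# Example (hypothetical proxies and datastores)
proxies = [
    Proxy("hotadd-proxy", {"ds1": {"hot_add", "nbd"}}),
    Proxy("san-proxy", {"ds1": {"direct_san", "nbd"}}, running_tasks=3),
    Proxy("nbd-proxy", {"ds1": {"nbd"}, "ds2": {"nbd"}}),
]
proxy, mode = pick_proxy(proxies, "ds1")
print(proxy.name, mode)  # san-proxy direct_san
```

In this sketch the Direct SAN proxy wins for `ds1` even though it is the busiest, because transport mode outranks load. Load only breaks ties between proxies that offer the same mode.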

When you set up a Veeam proxy for VMware, you are given the option to either manually choose connection modes and datastores or let the proxy detect them automatically. You can also set the maximum number of concurrent tasks for the proxy.

The backup server also takes each proxy’s current load into account, relative to its maximum concurrent tasks setting, to make sure that the infrastructure isn’t overloaded. With v7 we also enhanced our compression algorithms to dramatically reduce CPU usage, so that each proxy could do more work. As customers upgraded to v7 and started to put it through its paces, one thing became clear…we were too fast!
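A per-proxy "maximum concurrent tasks" limit behaves much like a counting semaphore. The following minimal sketch assumes that model. `ProxySlots` is a hypothetical name, and the non-blocking acquire stands in for the scheduler moving on to another proxy, or queueing the task, when a proxy is full.

```python
import threading


class ProxySlots:
    """Hypothetical cap on concurrent tasks for one proxy."""

    def __init__(self, max_tasks: int):
        # BoundedSemaphore raises ValueError if released more times than acquired,
        # which catches bookkeeping bugs where a task is "finished" twice.
        self._sem = threading.BoundedSemaphore(max_tasks)

    def try_start(self) -> bool:
        # Non-blocking: False means every slot is taken right now.
        return self._sem.acquire(blocking=False)

    def finish(self) -> None:
        # Free the slot when a task completes.
        self._sem.release()


slots = ProxySlots(max_tasks=2)
print(slots.try_start(), slots.try_start(), slots.try_start())  # True True False
```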

For many of our customers, there was no problem with leaving the backup jobs and proxies on full automatic mode. The backup jobs completed on time and there were no complaints. But certain customers, especially those with 24/7 workloads (the always-on business) and with too many backup proxies deployed, found that the load from backup jobs was degrading the performance of their production storage. Applications became less responsive during backups, and some customers even saw alarms in their monitoring systems. The issue was IOPS: there weren’t enough of them. Backup can be pretty I/O intensive, and if some VMs are running other I/O-intensive operations in parallel with backup, you can run into trouble.

To solve this issue, we first took the “easy” route in one of the first v7 patches and simply set limits on the datastores: “No more than x tasks per datastore at a time.” But this solution was too difficult to manage…what if someone placed a new SQL server on a datastore, making your carefully planned limit too high? And if you set the limit too conservatively, you might not have enough time in your backup window to complete the backups. It became clear to us that manual limits just won’t cut it in highly dynamic virtual infrastructures.

So with v8, we are introducing Backup I/O Control (patent-pending). Backup I/O Control is a new, global setting that lets you limit how much latency is acceptable for any VMware or Hyper-V datastore. Think of it as a service-level agreement for your datastores.

The idea is pretty simple and comes in two parts. The first setting, “Stop assigning new tasks to datastore at:”, means that when the backup server assigns a proxy to a virtual disk, it now takes the datastore’s current I/O latency into consideration. The backup job waits for the datastore to become “not too busy” before starting the backup. The second setting, “Throttle I/O of existing tasks at:”, applies when a backup job is already running and latency becomes an issue due to an external load. For example, if a SQL maintenance process started running in a VM on the same datastore as the backup job, the backup job would automatically throttle its read I/O from that datastore so that latency drops below the configured threshold. The backup job might take a little longer in this case, but at least it won’t affect applications.
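The two settings can be pictured as an admission check plus a feedback loop. Veeam has not published how Backup I/O Control throttles (the feature is patent-pending), so the following Python sketch swaps in a plain additive-increase/multiplicative-decrease (AIMD) controller acting on a per-datastore read-rate cap. The `IOControl` class, the MB/s rate cap, the step size, and the threshold values in the example are all assumptions chosen for illustration. The sketch also assumes the "stop new tasks" threshold sits below the "throttle" threshold.

```python
from dataclasses import dataclass


@dataclass
class IOControl:
    """Hypothetical two-threshold latency controller for one datastore."""
    stop_new_ms: float      # "Stop assigning new tasks to datastore at:"
    throttle_ms: float      # "Throttle I/O of existing tasks at:"
    max_rate: float         # assumed ceiling on backup read rate, MB/s
    min_rate: float = 1.0   # never throttle running tasks all the way to zero
    step: float = 10.0      # MB/s regained per healthy latency sample

    def __post_init__(self):
        self.rate = self.max_rate  # start unthrottled

    def can_start_new_task(self, latency_ms: float) -> bool:
        """Admission control: hold new tasks while the datastore is too busy."""
        return latency_ms < self.stop_new_ms

    def update(self, latency_ms: float) -> float:
        """Adjust the read-rate cap for running tasks from one latency sample (AIMD)."""
        if latency_ms >= self.throttle_ms:
            self.rate = max(self.min_rate, self.rate / 2)  # back off quickly
        else:
            self.rate = min(self.max_rate, self.rate + self.step)  # recover gradually
        return self.rate


ctl = IOControl(stop_new_ms=20, throttle_ms=30, max_rate=200)  # example values only
print(ctl.can_start_new_task(25))  # False: new tasks wait
print([ctl.update(ms) for ms in (35, 40, 15)])  # [100.0, 50.0, 60.0]
```

In this sketch, a 25 ms sample holds back new tasks without slowing the running ones. Two samples above 30 ms halve the cap twice, from 200 to 50 MB/s, and each healthy sample then restores 10 MB/s. Halving under contention and recovering slowly is a common way to back off fast while probing carefully for spare capacity.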

You can see an example of this setting in action in the graph below, where I/O control is turned on:

The green line in the graph is read latency. You can see that once Backup I/O Control is enabled, read latency drops below 20 ms, and then spikes again when it is disabled.

In Veeam Backup & Replication v8, Backup I/O Control is a global setting in our Enterprise Edition. For the default values, we went with thresholds slightly below the Warning and Error latency levels that we commonly see among customers who use our monitoring solutions. These defaults are fully customizable, however.

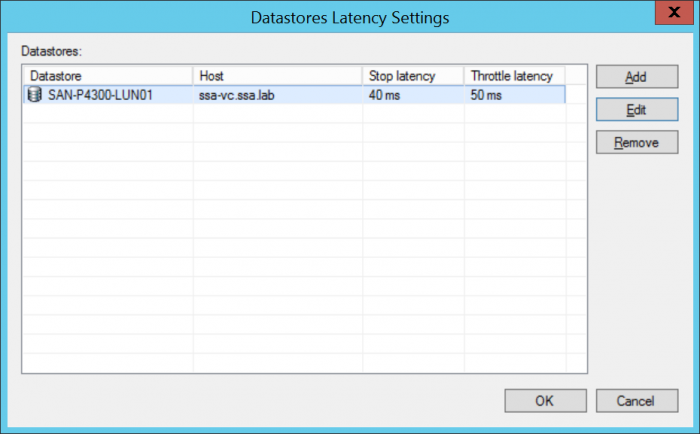

If you have our Enterprise+ Edition, you can use Backup I/O Control to set latency thresholds on a per-datastore basis rather than through a single global setting. This is especially useful if some datastores need higher or lower thresholds based on workload or importance; for example, higher latency is usually acceptable for test/dev workloads.
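Per-datastore thresholds can be modeled as a lookup with a global fallback. The following minimal sketch assumes that model. `Thresholds`, `thresholds_for`, the datastore names, and the millisecond values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Thresholds:
    stop_new_ms: float  # stop assigning new tasks at this latency
    throttle_ms: float  # throttle running tasks at this latency


def thresholds_for(datastore: str,
                   global_default: Thresholds,
                   overrides: Optional[Dict[str, Thresholds]] = None) -> Thresholds:
    """Use a per-datastore override if one exists, else the global setting."""
    return (overrides or {}).get(datastore, global_default)


global_default = Thresholds(stop_new_ms=20, throttle_ms=30)  # example values only
overrides = {"testdev-ds": Thresholds(stop_new_ms=40, throttle_ms=60)}  # looser for test/dev
print(thresholds_for("testdev-ds", global_default, overrides))
print(thresholds_for("sql-ds", global_default, overrides))  # falls back to global
```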

As always, here at Veeam we’re continuously responding to the needs of our customers, and the evolution of this feature is no exception. With the introduction of proxy servers and distributed processing in v6, and parallel processing in v7, we made it easier for our customers to scale and to maximize backup processing along the way. Now, with the addition of Backup I/O Control in v8, customers can also control how hard backup processing pushes their storage. This gives them the highest possible backup performance while minimizing the impact on production workloads, and better meets the requirements of the always-on business.