Coming from a traditional infrastructure background with the nostalgic memory of a cleanly separated storage fabric, I am well aware of the challenges faced by organizations and operational teams when moving to the Hyper-Converged/Converged world.

The biggest challenge I’ve seen is a mixture of getting to grips with some of the new technologies in this area and, perhaps more challenging, getting the right resources (traditional network vs server engineers) to work together in this newly merged world. RDMA is one technology in this space that can contribute greatly to overall performance in various implementations.

In this blog I want to talk about my experience of RDMA over Converged Ethernet (RoCEv2) within a Microsoft Storage Spaces Direct deployment, and about an alternative option for RDMA over Ethernet, iWARP.

What is RDMA?

Remote Direct Memory Access (RDMA) allows the network card to place incoming network data directly into host memory, bypassing the host’s CPU. This can provide massive performance benefits when moving data over the network and is often used in High Performance Computing or Hyper-Converged/Converged deployments of Hyper-V with Storage Spaces Direct (the focus of this blog). In a Hyper-V deployment, SMB Direct is used to create a high throughput, low latency SMB connection to remote storage using RDMA without utilizing either host’s CPU. With this SMB on steroids, it’s easy to see that RDMA has many potential applications for solutions which utilize SMB 3.0.

So how do you turn it on?

By default, in Windows, if your NIC is RDMA capable, the functionality will be enabled, easy!… and dangerous!

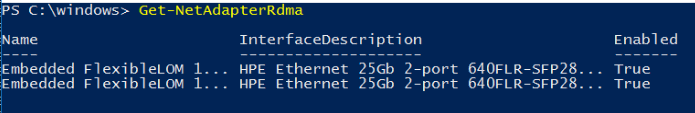

Verify the status of RDMA with PowerShell:
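The command itself is not reproduced above, so the following is a minimal sketch rather than the original snippet. It assumes the built-in NetAdapter and SMB cmdlets that ship with Windows Server 2012 and later, and the adapter name "Ethernet" in the commented line is only a placeholder.

```powershell
# List every RDMA-capable adapter and whether RDMA is currently enabled on it
Get-NetAdapterRdma | Format-Table Name, InterfaceDescription, Enabled

# Show which interfaces the SMB client considers RDMA capable
Get-SmbClientNetworkInterface | Format-Table FriendlyName, RdmaCapable, RssCapable

# Optional: turn RDMA off on an adapter until the network is ready for it
# ("Ethernet" is a placeholder adapter name)
# Disable-NetAdapterRdma -Name "Ethernet"
```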

And it will just work?

Maybe… With the great performance benefits comes a massive potential for instability. RDMA requires a stable, almost lossless network. This blog focuses on my recent experience at a client site with RDMA over Converged Ethernet (RoCEv2), which uses the UDP protocol and is therefore sensitive to network disruption.

A bit of history

The customer had recently implemented several converged Hyper-V with Storage Spaces Direct clusters using HPE Server hardware and HPE FlexFabric switches. This turned into one of those nightmare scenarios where everything seemed fine (even in testing) until the Production load increased on the environment and stability dropped significantly. By this time, there were several live services running on the new infrastructure. Daily occurrences of cluster shared volumes going offline, hosts blue/black screening and guest VM performance issues were becoming the norm. After a lot of troubleshooting with vendor assistance on site, several configurations that are key to a stable RDMA environment were found to be missing. Whilst this is not a definitive guide, I will highlight some of the findings here.

Spoiler alert!

I mentioned that there were two options for implementing RDMA over Ethernet, RoCEv2 and iWARP. The experience and configuration notes in this blog are largely aimed at a RoCEv2 implementation (although they may apply to iWARP in some architectures). Priority flow control and its associated settings are, in a nutshell, put in place to control the RDMA traffic with the aim of avoiding network disruption, which RoCE has no in-built mechanism to recover from (being UDP based). iWARP, on the other hand, is built on top of TCP, gaining the benefits associated with congestion-aware protocols. Long story short, if you are at the design stage of a Microsoft Storage Spaces Direct based solution, I would strongly recommend you look at purchasing NICs which support iWARP! You will save yourself a lot of time and stress!

Key Server Configuration for RDMA/RoCE/PFC/ETS

Priority Flow Control (PFC) is a required configuration for RoCE deployments and must be configured on all nodes (storage/compute) and switch ports in the data path. The relevant traffic (SMB Direct on port 445 in our case) is tagged with an 802.1p priority, and PFC allows any point in the data path to signal when it is becoming congested for that priority by sending a pause frame, which slows down the data flow.

We can also use Enhanced Transmission Selection (ETS) settings within the Windows Server Data Center Bridging (DCB) feature to allocate bandwidth reservations to traffic types.

In the configuration example below, we are setting server level configurations, configuring a RoCE capable Ethernet adapter named “Ethernet” with the relevant settings. We are creating three QoS policies for the core traffic types of an S2D cluster — SMB, Cluster traffic and everything else.

We are tagging our SMB traffic with queue three and cluster traffic with queue five. It is important you consult your switch vendor documentation or support to validate the recommended queues, as they may be different from this example. In our case, we still to this day face uncertainty regarding the recommended values for HPE FlexFabric!

In addition, we are setting bandwidth allocations of 95% for queue three (SMB) and 1% for queue five (cluster), ensuring these traffic types are given priority during times of contention.

Configuration example

# Install the Data Center Bridging (DCB) feature

Install-WindowsFeature -Name Data-Center-Bridging

# Remove any previous configuration

Remove-NetQosTrafficClass -Confirm:$False

Remove-NetQosPolicy -Confirm:$False

# Disable DCBx auto negotiation as this is not supported by Microsoft

Set-NetQosDcbxSetting -Willing 0

Get-NetAdapter "Ethernet" | Set-NetQosDcbxSetting -Willing 0

# Create QoS policies and tag each type of traffic with the relevant priority

New-NetQosPolicy "SMB" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 3

New-NetQosPolicy "DEFAULT" -Default -PriorityValue8021Action 0

New-NetQosPolicy "Cluster" -Cluster -PriorityValue8021Action 5

# Enable Priority Flow Control (PFC) on a specific priority. Disable for others

Disable-NetQosFlowControl 0,1,2,4,6,7

Enable-NetQosFlowControl 3,5

# Enable QoS on the relevant interface

Enable-NetAdapterQos -InterfaceAlias "Ethernet"

# Create ETS traffic classes and allocate bandwidth to each priority

New-NetQosTrafficClass "SMB" -Priority 3 -BandwidthPercentage 95 -Algorithm ETS

New-NetQosTrafficClass "Cluster" -Priority 5 -BandwidthPercentage 1 -Algorithm ETS
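Once the configuration has been applied, it is worth reading it back on every node before moving on to the switches. The following is a small sketch using the standard DCB/QoS cmdlets; it assumes the same adapter name ("Ethernet") and the same priorities (3 and 5) as the example above.

```powershell
# Read back the QoS policies and the 802.1p priority each one applies
Get-NetQosPolicy

# PFC should show Enabled = True for priorities 3 and 5 only
Get-NetQosFlowControl

# Traffic classes: SMB 95% and Cluster 1%; the remaining 4% stays with the default class
Get-NetQosTrafficClass

# Per-adapter operational view of the DCB/QoS settings
Get-NetAdapterQos -Name "Ethernet"
```

Comparing this output across all nodes is a quick way to catch a host that was missed or only partially configured.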

Network configuration

Configurations of PFC and ETS vary from vendor to vendor, so I’d recommend you ask your switch vendor account manager to arrange some time with an SME who has experience of Hyper-Converged deployments. An example port config for enabling priority flow control on queues three and five on HPE FlexFabric is shown here:

priority-flow-control enable

priority-flow-control no-drop dot1p 3,5

stp edged-port

qos trust dot1p

qos wrr be group sp

qos wrr af1 group 1 byte-count 95

qos wrr af2 group 1 byte-count 1

Verification and monitoring

A simple verification that your nodes are using RDMA is to perform a file copy from one node to the other and then run the Get-SmbMultichannelConnection PowerShell command, ensuring all paths are showing as RDMA capable:
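The original output is not reproduced here. As a rough sketch of the check, assuming it is run on the node that initiated the copy while the copy is still in progress:

```powershell
# Show the active SMB Multichannel connections and their capabilities
Get-SmbMultichannelConnection |
    Format-Table ServerName, Selected, ClientInterfaceIndex, ServerInterfaceIndex, ClientRdmaCapable, ClientRSSCapable

# Flag any connection that is not RDMA capable on the client side
Get-SmbMultichannelConnection |
    Where-Object { -not $_.ClientRdmaCapable }
```

If the second command returns anything, that path is falling back to regular TCP and is worth investigating.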

In addition, open Task Manager (taskmgr) while performing the file copy. CPU and Ethernet utilization should remain unaffected, because the network traffic is being moved directly between the NIC and memory, bypassing the OS!

There are also many relevant performance counters around this topic, but I’ll highlight a couple of key ones. The RDMA Activity counters are available on all versions of Windows Server from 2012 onwards. Monitor these counters during your file copy test to ensure RDMA traffic is shown on the expected NICs, paying attention to the RDMA Failed Connection Attempts and error counters, which may indicate a transport issue.
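These counters can also be sampled from PowerShell rather than Performance Monitor. The following is a sketch under the assumption that the "RDMA Activity" counter set is present on the node; the sample interval and count are arbitrary choices.

```powershell
# List the counters available in the RDMA Activity set
(Get-Counter -ListSet "RDMA Activity").Counter

# Sample throughput and failure counters every 2 seconds for 1 minute
$counters = @(
    "\RDMA Activity(*)\RDMA Inbound Bytes/sec",
    "\RDMA Activity(*)\RDMA Outbound Bytes/sec",
    "\RDMA Activity(*)\RDMA Failed Connection Attempts",
    "\RDMA Activity(*)\RDMA Connection Errors"
)
Get-Counter -Counter $counters -SampleInterval 2 -MaxSamples 30
```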

Your NIC driver should also come with additional counters for PFC/QoS. If it doesn’t, look to upgrade the driver or install these features where possible. Adding counters for your relevant QoS queues allows you to verify that traffic is being sent from one node and received on the other on the correct queue. A key metric to aid troubleshooting is the number and duration of sent/received pause frames. Some pause frames are expected, especially during node maintenance in an S2D cluster (due to the repair/rebalance process causing high traffic), but if you are seeing these values rise rapidly all the time, there may be a configuration issue or a bandwidth constraint.
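Because the names of these driver-supplied counter sets differ between NIC vendors, one way to find them is to search the installed counter sets by name. This is only a sketch: the match pattern is a guess at likely keywords, and the counter set name in the commented line is a placeholder.

```powershell
# Find counter sets whose names suggest QoS, PFC or RDMA content
Get-Counter -ListSet * |
    Where-Object { $_.CounterSetName -match "QoS|PFC|RDMA|Flow Control" } |
    Select-Object CounterSetName, Description

# Then list the counters in a set of interest (the name below is a placeholder)
# (Get-Counter -ListSet "<vendor QoS counter set>").Counter
```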

A useful event log on your nodes that can indicate RDMA connectivity issues is the Microsoft-Windows-SMBClient/Connectivity log. The error below is a clear indication of a connectivity issue between RDMA enabled nodes:
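The error text itself is not reproduced here. To pull recent entries from that log on a node, something along these lines should work; the event count and the level filter are arbitrary choices.

```powershell
# Most recent warnings and errors from the SMB client connectivity log
Get-WinEvent -LogName "Microsoft-Windows-SMBClient/Connectivity" -MaxEvents 50 |
    Where-Object { $_.LevelDisplayName -in "Error", "Warning" } |
    Format-List TimeCreated, Id, LevelDisplayName, Message
```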

Conclusion

I hope the information above gives an insight into the minimal configuration required for an RDMA over Converged Ethernet deployment. My honest opinion, having recently been involved in a deployment of multiple clusters, 80+ nodes in total… use iWARP rather than RoCE!

In a Hyper-Converged/Converged environment with lower bandwidth NICs (10 Gb), some of the settings above may still be required to avoid iWARP traffic being dropped at times of contention, but in most cases the in-built mechanisms of TCP will be enough, and the network config (and room for error) is minimal in comparison.

There are performance reasons why you might want to use RoCE: in theory it provides lower latency by using UDP, which carries less processing overhead. I’m told RoCE is used in the underlying Azure infrastructure, but they have massive resources and network skills to manage the potential adverse effects of a misconfigured RoCE environment. There’s a lot to be said for keeping it simple!