Veeam Backup & Replication 9.5 Update 4 was a major release that brought many improvements and interesting features. With tape not only far from dead but rising in popularity, it’s no surprise that tape support was a major focus of the new version. Over the last few months, Veeam technical support has gathered enough cases to see which issues customers encounter most often, and with the recent release of Update 4b, it’s time to go over the new tape functionality and show our readers the practical side of incorporating tape into their backup strategies.

Library parallel processing

Several versions ago, Veeam Backup & Replication’s media pool became “global.” That means that a single media pool can contain tapes that are associated with multiple tape devices. That already gave users several advantages. First, it considerably simplified tape management: tapes could now “travel” seamlessly between libraries. All users had to do was rescan the library the tapes were moved to, or, if a barcode reader is not present (for example, in the case of a standalone drive), run an inventory (forcing Veeam Backup & Replication to read the tape header). Second, global media pools introduced tape “High Availability.” Users could choose which of three events (“Library is offline,” “No media available,” and “All tape drives are busy”) would cause a tape job to fail over to the next tape device in the list.

By the way, this is also where many users get stuck when trying to delete an old device. They would get an error stating that the tape device is still in use by media pool X. If on the Tapes step you don’t see any tapes associated with the tape device, be sure to click on the Manage button (see the screenshot below); most likely that’s where the old tape device is hiding.

Update 4 went one step further and introduced true parallel processing between tape libraries. Now, if you go to media pool settings – Tapes – Manage you will be able to select a mode in which your tape devices should operate:

To enable parallel processing, set the role to “Active.” Giving devices a “Passive” role enables the failover mechanism instead. Keep in mind that it’s not possible to set an arbitrary mix of active and passive devices: you can either have one active device with all other devices passive, or make all devices active. The general recommendation is to use active mode if you have several similar tape devices. If you have a modern and an old library, you can give the old library a passive role so that your jobs won’t stop, even if there are issues with the main (active) library.
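The role rule above can be expressed as a small check. This is only an illustrative sketch: the function name, the library names and the "active"/"passive" strings are assumptions made for the example, not part of any Veeam API.

```python
from typing import Dict


def describe_library_mode(roles: Dict[str, str]) -> str:
    """Classify a media pool's library roles as 'parallel' or 'failover'.

    `roles` maps a hypothetical library name to "active" or "passive".
    Raises ValueError for combinations the article says are not allowed.
    """
    if not roles:
        raise ValueError("at least one tape library is required")
    unknown = {r for r in roles.values() if r not in ("active", "passive")}
    if unknown:
        raise ValueError(f"unknown roles: {sorted(unknown)}")

    active = [name for name, role in roles.items() if role == "active"]
    if len(active) == len(roles):
        return "parallel"   # every library is active
    if len(active) == 1:
        return "failover"   # one active library, the rest are passive
    raise ValueError("use either one active library or all active libraries")
```

For example, `describe_library_mode({"new-lib": "active", "old-lib": "passive"})` describes the "modern plus old library" setup and returns `"failover"`.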

As you can see, the setup is straightforward. However, I would like to take a moment to review how tape parallel processing works in Veeam Backup & Replication.

- To enable parallel processing within a single backup to tape job, this job must use either several backup jobs as sources, or the source backup job must be per-VM. If the source is a single job with multiple VMs that is creating normal backup files, the load cannot be split between the drives. Make sure your sources are set up correctly!

- Tape parallel processing is enabled at the media pool level. In media pool settings on the Options step, you can specify how many drives the job uses. If you use several tape devices in active mode, this number is the total number across all tape devices connected to this media pool. If parallel processing within a single job is allowed, see the previous point for requirements.

- Wondering how media sets are created with parallel processing? The rule is actually very simple: each drive keeps its own media set. However, this may create challenges in some setups. Consider this example: a customer opened a support ticket because Veeam Backup & Replication was using an excessive number of tapes, even though many of the tapes were almost empty. The investigation showed that the customer had a media pool set to create a separate media set for every session. Initially that worked well because the amount of data for each session fit a set number of tapes almost perfectly. Then another drive was added, and the customer started using parallel processing. Now the data was spread across two sets of tapes. Since the media set was finalized for every session, Veeam Backup & Replication could not continue writing to half-empty tapes on the next session, so the customer saw an unacceptable increase in tape usage. The customer had to choose between 1) managing source data so that it fits better on two sets of tapes (a rather unreliable solution), 2) setting the media set to be continuous, or 3) disabling parallel processing.

Two or more media sets created in parallel can lead to confusing naming. Customers sometimes complain when they see that several tapes have an identical sequence number and were written at the same time. This is actually normal and does not cause any issues. To differentiate the tapes better, we recommend adding the %ID% variable to the media set name.
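To see why the customer in the example above ran out of tapes, it helps to put rough numbers on it. The sketch below is a simplified model, not Veeam's actual allocation logic: it assumes each session's data is split evenly across the drives, that each drive keeps its own media set, and it ignores compression and per-file overhead. All function names and figures are hypothetical.

```python
import math


def tapes_new_set_per_session(session_gb: float, tape_gb: float,
                              drives: int, sessions: int) -> int:
    """Tapes consumed when every session finalizes the media set.

    Each drive starts on a fresh tape every session, so partially
    filled tapes are never appended to.
    """
    per_drive = session_gb / drives
    return sessions * drives * math.ceil(per_drive / tape_gb)


def tapes_continuous_set(session_gb: float, tape_gb: float,
                         drives: int, sessions: int) -> int:
    """Tapes consumed when each drive keeps appending to its own media set."""
    per_drive_total = sessions * session_gb / drives
    return drives * math.ceil(per_drive_total / tape_gb)


# Hypothetical numbers: 2400 GB per session, 2500 GB tapes, 10 sessions.
one_drive = tapes_new_set_per_session(2400, 2500, drives=1, sessions=10)
two_drives = tapes_new_set_per_session(2400, 2500, drives=2, sessions=10)
continuous = tapes_continuous_set(2400, 2500, drives=2, sessions=10)
```

With these assumed figures, one drive fills a tape per session, while two drives leave two half-empty tapes per session and double the consumption. Switching to a continuous media set brings the count back down because the half-empty tapes can be appended to.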

Tape GFS improvements

Before diving into tape GFS improvements, I would like to take a moment to review the default tape GFS workflow. On the day when the GFS run is scheduled, the job starts at 00:00 and waits for a new point from the source job. If this new point is incremental, tape GFS will generate a virtual synthetic full on tape. If the point is a full backup, it will be copied as is. The tape GFS job will keep waiting for up to 24 hours, and if a new point has not arrived by then, tape GFS will fail over to the previous available point. This creates a problem with backup copy jobs (BCJ): because of a BCJ’s continuous nature, the latest point remains locked for the duration of the copy interval. Depending on the BCJ’s settings, tape GFS may have to wait for a full day before failing over to the previous point.

If the GFS job has to create points for several intervals on the same day (for example, weekly and monthly), tape GFS will not copy two separate points; instead, a single point will be used as both weekly and monthly. Finally, each interval keeps its own media set. For example, if a tape was used for a weekly point, it will not be used to store a monthly point unless it is erased or marked as free. This baffles a lot of users: they see an expired tape in the GFS media pool, yet the GFS tape job seems to ignore it and asks for a valid tape. This whole default workflow could be tweaked using registry values, which support used to great effect.
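The waiting and failover behavior described above can be summarized in a few lines of code. This is a sketch of the decision as the article describes it, not the product's implementation: `RestorePoint`, `pick_gfs_point` and the `max_wait` parameter are names invented for the illustration, and taking the first point that appears after the job starts is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class RestorePoint:
    created: datetime
    is_full: bool


def pick_gfs_point(points: List[RestorePoint], job_start: datetime,
                   now: datetime,
                   max_wait: timedelta = timedelta(hours=24),
                   ) -> Optional[Tuple[RestorePoint, str]]:
    """Return (point, action), or None while the job should keep waiting."""
    new_points = [p for p in points if job_start <= p.created <= now]
    if new_points:
        # Assumption: the first point created after the job started is used.
        chosen = min(new_points, key=lambda p: p.created)
    elif now - job_start >= max_wait:
        # Waiting period is over: fail over to the latest earlier point.
        older = [p for p in points if p.created < job_start]
        if not older:
            return None
        chosen = max(older, key=lambda p: p.created)
    else:
        return None  # still inside the waiting window
    action = "copy full as is" if chosen.is_full else "build virtual synthetic full"
    return chosen, action
```

Calling it with `max_wait=timedelta(0)` models a job that never waits and always falls back to the previous point when today's point is missing.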

So, what improvements does Update 4 have to offer? First, two registry value tweaks have now been added to the GUI:

- You can now select the exact time when the tape GFS job should start (overriding the default 00:00 time) on the Schedule step.

- Now you can tell the tape GFS job to fail over immediately to the previous point (instead of waiting for up to 24 hours), if today’s point is not available. The option is found under Options – Advanced – Advanced tab:

- A new registry value has also been added, which allows the tape GFS job to use expired tapes from any media set, and not only the one that belongs to a particular interval. Please contact support if you think you need this.

GFS parallel processing

If you look at the previous version’s user guide page for GFS media pools, you will be greeted with a box saying that parallel processing is not supported for tape GFS. This is no longer true: Update 4 has added parallel processing for tape GFS jobs as well. The workflow is the same as for normal media pools, which was described in the first section. Be wary of possible elevated tape usage when using GFS and parallel processing, as such a setup requires many media sets to be created. As a rule of thumb for the minimum number of tapes, you can use this formula:

A (media sets configured) x B (drives) = C (tapes used)

For example, a setup with all 5 media sets enabled and 2 drives will require a minimum of 10 tapes.
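The same rule of thumb as a tiny helper, in case you want to plug in your own numbers. The function name and the list of interval names are assumptions made for the example. The result is only the minimum from the formula above, not a capacity plan.

```python
# The five GFS media sets, assuming the daily media set is enabled too.
GFS_MEDIA_SETS = ("daily", "weekly", "monthly", "quarterly", "yearly")


def min_gfs_tapes(enabled_sets, drives: int) -> int:
    """Minimum tapes = enabled media sets x drives (rule of thumb)."""
    enabled = set(enabled_sets)
    unknown = enabled - set(GFS_MEDIA_SETS)
    if unknown:
        raise ValueError(f"unknown media sets: {sorted(unknown)}")
    if drives < 1:
        raise ValueError("at least one drive is required")
    return len(enabled) * drives
```

`min_gfs_tapes(GFS_MEDIA_SETS, 2)` reproduces the example above: 5 media sets and 2 drives give a minimum of 10 tapes.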

Daily media set

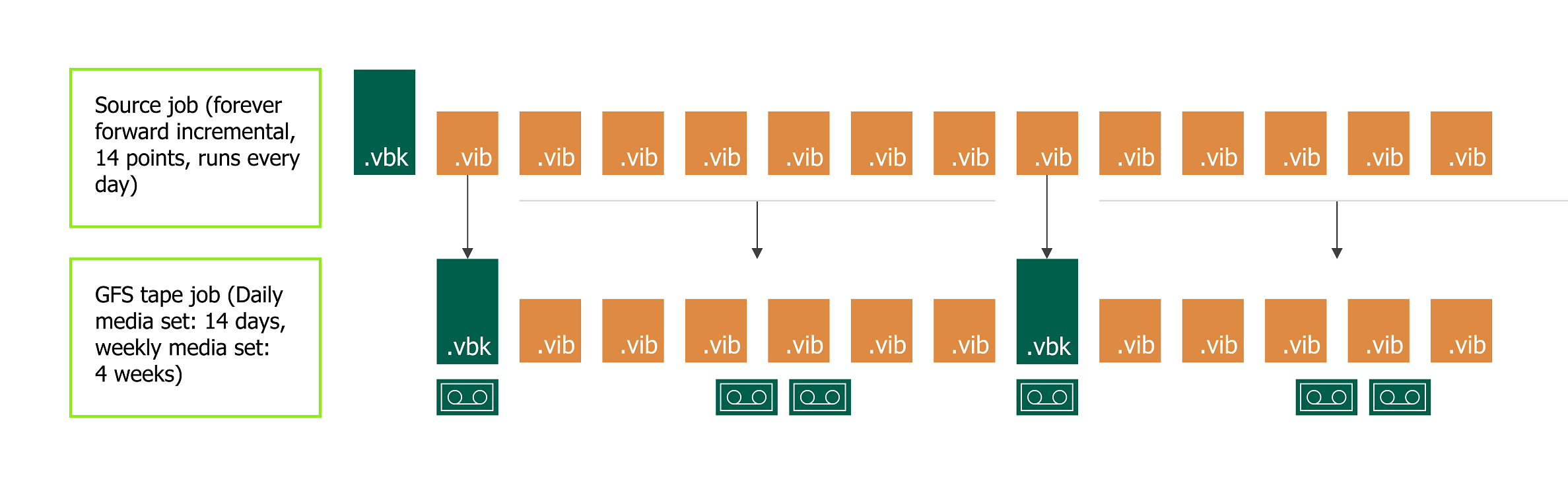

A major addition to tape GFS functionality is the “daily media set.” This allows users to combine GFS and normal tape jobs into a single entity. If you’re using GFS media pools for long-term archiving, but also use normal media pools to keep a short incremental chain on tapes, consider simplifying management by using the daily media set instead of a separate job. You can enable this in GFS media pool settings:

The daily media set requires the weekly media set to be enabled as well. With the daily media set, the GFS job checks every day whether the source job created any full or incremental points and puts them on tape. If the source job creates multiple points, they will all be copied to tape. This helps fill the gap between weekly GFS points and makes it possible to restore from an incremental point. During the restore, Veeam Backup & Replication will ask for tapes containing the closest full backup (most likely a weekly GFS point) and the linked increments. Mind that, just like other backup to tape jobs, the daily media set does not copy rollbacks from reverse incremental jobs. Only the full backup, which is always the most recent point in reverse incremental jobs, will be copied.

To give you a practical example, imagine the following scenario:

You have a forever forward incremental job that runs every day at 5:00 AM and is set to keep 14 restore points.

For tape, you can configure a GFS job with the daily media set configured to keep 14 days, the weekly media set to keep 4 weeks, and the desired number of months, quarters and years. The daily media set can be configured to append data and not to export the tape. Weekly and older GFS intervals do not append data and export the tapes after each session. That way, tapes containing incremental points are kept in the library and rotated according to the retention. Meanwhile, tapes containing full points are brought offline and can be stowed in a safe vault.

Should you need to restore, Veeam Backup & Replication will issue a request to bring online a tape containing the weekly GFS point, and will use the tape from the daily media set to restore the linked increments.
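As a rough illustration of which tapes get requested, the sketch below walks back from the requested point to the closest full backup and collects the tapes along the way. It is a simplified model under stated assumptions: one point per day, a hypothetical `TapePoint` record, and made-up tape names. The real catalog is considerably richer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TapePoint:
    day: int    # day index of the restore point
    kind: str   # "full" or "increment"
    tape: str   # tape the point was written to (hypothetical names)


def tapes_needed(points: List[TapePoint], target_day: int) -> List[str]:
    """Return the tapes needed to restore `target_day`, in read order."""
    chain = sorted((p for p in points if p.day <= target_day),
                   key=lambda p: p.day)
    if not chain or chain[-1].day != target_day:
        raise ValueError("no restore point for the requested day")
    full_positions = [i for i, p in enumerate(chain) if p.kind == "full"]
    if not full_positions:
        raise ValueError("no full backup precedes the requested point")
    # Closest full backup plus every increment up to the target.
    needed = chain[full_positions[-1]:]
    # dict.fromkeys removes duplicate tapes while keeping their order.
    return list(dict.fromkeys(p.tape for p in needed))
```

With a weekly full on an exported tape and daily increments on a tape kept in the library, a restore of a mid-week point returns the weekly tape first and the daily tape second, which mirrors the request to bring the weekly tape online.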

WORM tapes support

WORM stands for Write Once Read Many. Once written, this kind of tape cannot be overwritten or erased, making it invaluable in certain scenarios. Due to technical difficulties, previous versions of Veeam Backup & Replication did not have support for WORM tapes.

Working with WORM tapes is not that different from normal tapes. The first step is to create a special WORM media pool. As usual, there are two types: normal and GFS. There is only one special thing about a WORM media pool: the retention policy is grayed out. Next, you need to add some tapes. This is probably where the majority of issues arise: WORM tapes are recognized as normal tapes, and vice versa. Remember that everything WORM-related has a blue-colored icon. Veeam Backup & Replication identifies a WORM tape by its barcode or during inventory. Be sure to consult the documentation for your tape device and use correct barcodes! For example, IBM libraries consider letters from V to Y in the barcode as an indication of a WORM tape. Using them incorrectly will create confusion in Veeam Backup & Replication.

NDMP backup

In Update 4, it is possible to back up and restore volumes from NAS devices if they support the NDMP protocol. The first step here (which sometimes gets overlooked) is adding an NDMP server. This is done from the Inventory view; our user guide provides the details.

NOTE: Mind the requirements and limitations, as the current version has several important restrictions. For example, NetApp Cluster Aware Backup (CAB) extensions are currently not supported for NDMP backup in Update 4b. The workaround is to configure node-scoped NDMP.

After the NDMP server is added to the infrastructure, it can be set as a source to a normal file to tape job. The restore, as with other files on tapes, is initiated from Files view. Keep in mind that for NDMP, currently Veeam Backup & Replication does not allow for restores of individual files on volumes. Only the whole volume can be restored to the original or to a new location.

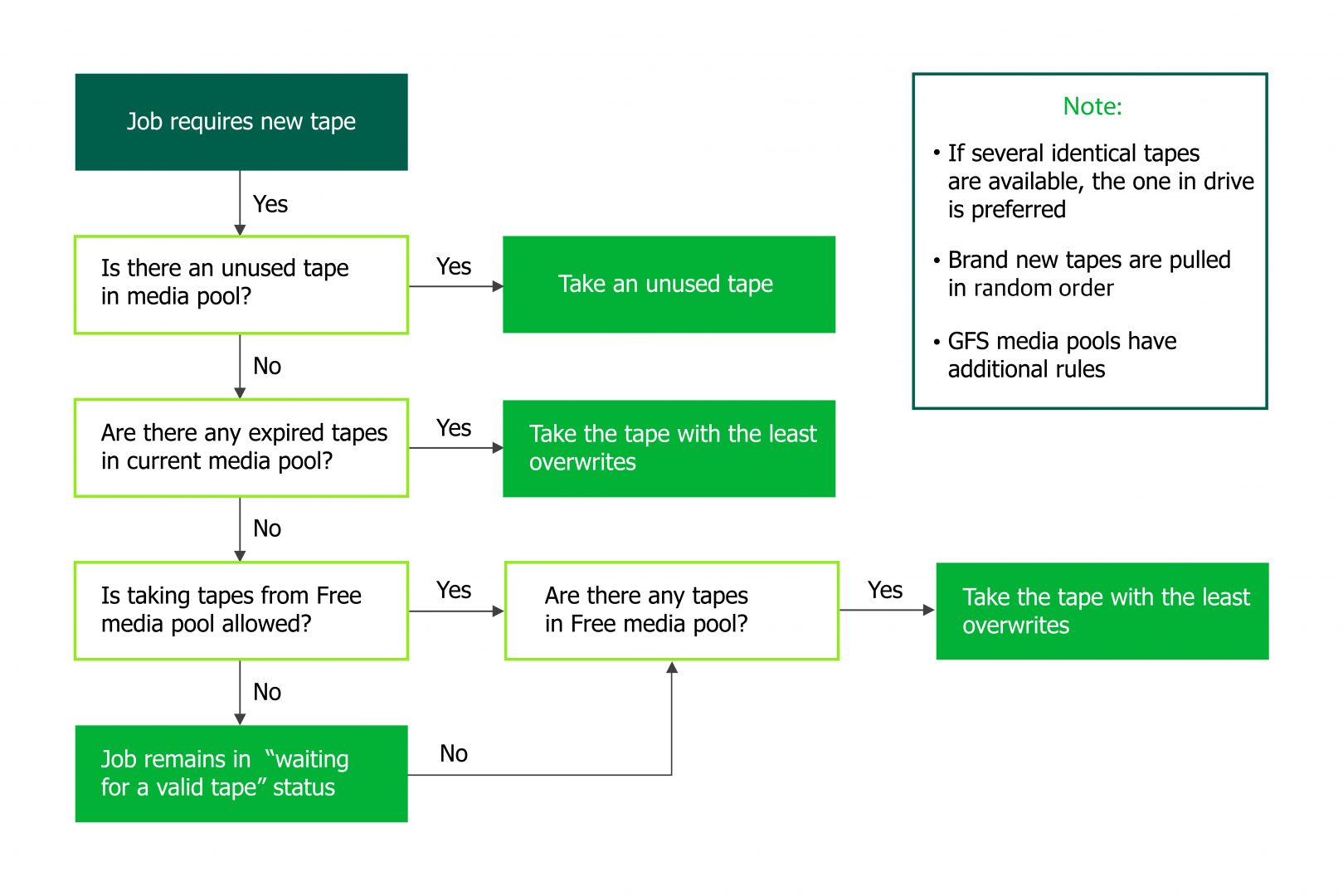

Tape selection mechanism

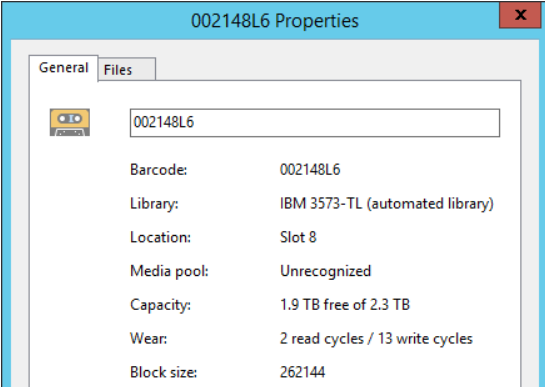

Update 4 added a new metric for used tapes: the number of read/write sessions. This metric is shown in the tape properties, in the Wear field:

Once a tape has been reused the maximum number of times (according to the tape specs), it will be moved to the Retired media pool. However, this is not new. Previous versions of Veeam Backup & Replication also tracked warnings from tape devices indicating that a tape should no longer be used. What is new is that Veeam Backup & Replication Update 4 tries to balance the usage and, among other things, will select the tape that has been used the fewest times. Here is a neat little scheme for you to remember the process:
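One part of that process, picking the least-worn tape and retiring exhausted ones, can be sketched in code. This is an illustrative model only, not Veeam's actual selection algorithm, which weighs other factors as well. The `Tape` record, its field names and the session limits are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Tape:
    barcode: str
    sessions: int      # read/write sessions so far (the "Wear" metric)
    max_sessions: int  # limit taken from the tape specs (assumed value)


def select_tape(free_tapes: List[Tape]) -> Tuple[Optional[Tape], List[Tape]]:
    """Return (tape to use next, tapes that should be retired)."""
    to_retire = [t for t in free_tapes if t.sessions >= t.max_sessions]
    usable = [t for t in free_tapes if t.sessions < t.max_sessions]
    # Balance wear: prefer the tape with the fewest sessions.
    chosen = min(usable, key=lambda t: t.sessions) if usable else None
    return chosen, to_retire
```

Given tapes with 12, 3 and 100 sessions against a limit of 100, the helper picks the tape with 3 sessions and flags the one at 100 for the Retired media pool.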

File from tape restore improvements

The logic for restores of folders from tapes saw some improvements in Update 4. Now when a user selects a specific backup set from which to restore, only the files that were present in the folder during that backup session are restored. The menu itself did not change, only the logic behind it. As before, in Update 4 you need to start the restore wizard (as always from the Files view) and click on the Backup Set button to choose the state to which to restore.

Tenant to tape

This feature allows service providers to offer a new type of service: writing clients’ backup data to tape. All configuration is done on the provider side. If Veeam Backup & Replication has a cloud provider license installed, the new job wizard will allow the addition of a Tenant as a source for the job. Such jobs can only work with GFS pools. Restore is also done on the provider side, and the options are to restore files 1) to the original cloud repository (existing files will be overwritten, and the job will be mapped to the restored backup set), 2) to a new cloud repository, or 3) to a local disk. Finally, if the customer has their own tape infrastructure, it’s also possible to simply mail the tape and avoid any network traffic.

Other improvements

Tape operator role. A new user role is now available. Users with this role can perform any service actions with tapes, but not initiate a restore.

Source processing order. You can now change the order in which sources are processed, just like VMs in backup jobs.

Include/exclude masks for file to tape jobs. In previous versions, file to tape jobs could only use inclusion masks. Update 4 adds exclusion masks as well. This works only for files and folders; NDMP backups are still done at the volume level.

Auto eject for standalone drive when tape is full. This is a small tweak in the tape logic when working with a standalone tape drive. If a tape gets filled up during a backup session, it will be ejected from the drive, so that a person near the drive could insert another tape. This is also useful in protecting against ransomware as the tape is ejected and offline.
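If you are unsure how the include/exclude masks mentioned above combine, the following sketch shows one plausible reading using Python's standard `fnmatch` module. It is an assumption that an exclusion wins over an inclusion and that matching is case-insensitive. Check the user guide for the exact mask syntax and precedence the product uses.

```python
from fnmatch import fnmatchcase
from typing import Iterable


def is_selected(filename: str, include: Iterable[str],
                exclude: Iterable[str]) -> bool:
    """True if the file matches an include mask and no exclude mask.

    Names and masks are lowercased so matching is case-insensitive
    (an assumption modeled on Windows file systems).
    """
    name = filename.lower()
    included = any(fnmatchcase(name, mask.lower()) for mask in include)
    excluded = any(fnmatchcase(name, mask.lower()) for mask in exclude)
    return included and not excluded


# Hypothetical masks: archive Office documents but skip Office lock files.
include_masks = ["*.docx", "*.xlsx"]
exclude_masks = ["~$*"]
selected = [f for f in ["Report.DOCX", "~$Report.docx", "notes.txt"]
            if is_selected(f, include_masks, exclude_masks)]
```

Here only `Report.DOCX` ends up in `selected`: the lock file matches an exclude mask, and `notes.txt` matches no include mask.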

Conclusion

The number of new tape features this update brought clearly shows that tape remains a focus of product management. I encourage you to explore these new capabilities and to consider how they can make your backup practice even better.