How to Clean Up Veeam Kasten for Kubernetes Orphaned Volumes on GKE k8s 1.28.x

Purpose

This guide explains how to clean up PersistentVolumes (PVs) provisioned by Veeam Kasten for Kubernetes that are not deleted on GKE with k8s 1.28, leaving orphaned volumes behind.

It has been observed that on GKE running k8s 1.28, PVs provisioned by Veeam Kasten for Kubernetes through the in-tree provisioner are not deleted by “kubectl delete pvc <pvcname>”. The resulting volume sprawl requires manual remediation. Restoring from snapshots and backups still works.

There is no risk of data loss or inability to recover from existing backups.

This issue affects only the in-tree provisioner “kubernetes.io/gce-pd” on GKE k8s 1.28.x.
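To check whether a cluster has StorageClasses that still point at this in-tree provisioner, a short bash helper such as the following can be used. It is a sketch that assumes “kubectl” is already configured for the affected GKE cluster; the function name intree_gce_storageclasses is hypothetical and not part of Kasten or GKE tooling.

```shell
# Hypothetical helper: print the names of StorageClasses whose provisioner is
# the in-tree GCE PD plugin ("kubernetes.io/gce-pd") affected by this issue.
# Assumes kubectl is already pointed at the affected GKE 1.28.x cluster.
intree_gce_storageclasses() {
  # Two columns per StorageClass: name and provisioner, without a header row.
  kubectl get storageclass --no-headers \
    -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner |
    awk '$2 == "kubernetes.io/gce-pd" { print $1 }'
}
```

Source the file and run “intree_gce_storageclasses”; each name it prints is a StorageClass whose provisioner is “kubernetes.io/gce-pd”, and an empty result means no StorageClass in the cluster references it.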

Solution

Identification of the Issue

This section demonstrates how to check the environment for orphaned volumes and clean them up.

Environment

- GKE k8s 1.28.x
- Kasten K10 version: 6.5.2
- In-tree provisioner “kubernetes.io/gce-pd”

Step 1: Protect Workloads Using Veeam Kasten for Kubernetes and Check for Orphaned PVs

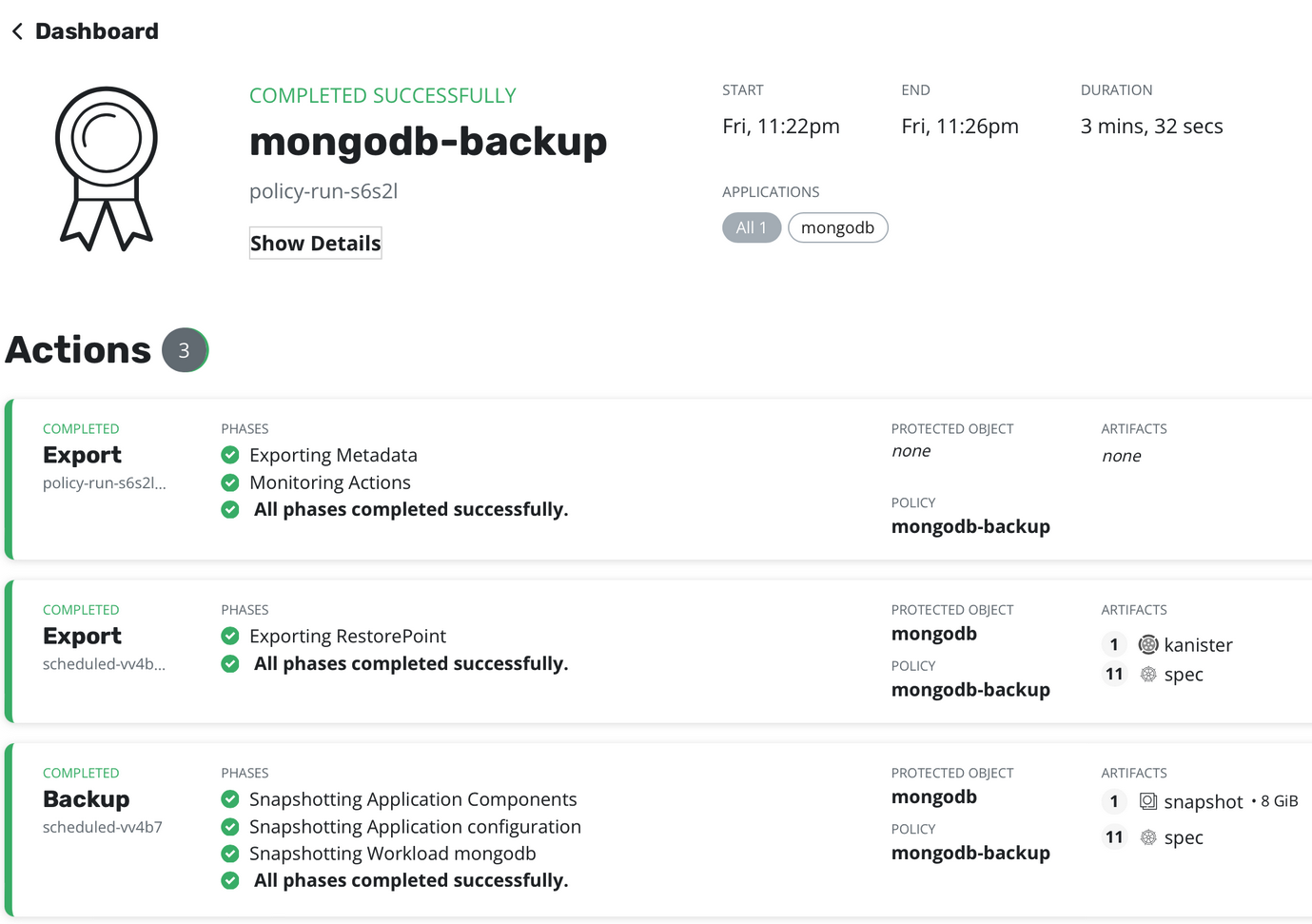

- Run the Policy to take a Snap+Export and capture the result.

The following screenshot demonstrates a successful snapshot and export.

The Kasten-provisioned PV, however, is left in the Failed phase with the following status:

```
status:
  message: 'error getting deleter volume plugin for volume "kio-4e1c8777bcd411eeb71cde3cda5267f6-0": no volume plugin matched'
  phase: Failed
```

- Check for Failed PVs to trace the orphaned volumes:

Example output:

```
kubectl get pv | grep -i failed
kio-4e1c8777bcd411eeb71cde3cda5267f6-0   8589934592   RWO   Delete   Failed   kasten-io/kio-4e1c8777bcd411eeb71cde3cda5267f6-0   standard   39m
kio-e747f57abcd111eeb71cde3cda5267f6-0   8589934592   RWO   Delete   Failed   kasten-io/kio-e747f57abcd111eeb71cde3cda5267f6-0   standard   57m

kubectl describe pv kio-4e1c8777bcd411eeb71cde3cda5267f6-0
Events:
  Type     Reason              Age   From                         Message
  ----     ------              ----  ----                         -------
  Warning  VolumeFailedDelete  15m   persistentvolume-controller  error getting deleter volume plugin for volume "kio-4e1c8777bcd411eeb71cde3cda5267f6-0": no volume plugin matched
```
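The same check can be scripted so that each Failed PV is listed together with its status message, which makes the “no volume plugin matched” error easy to spot. This is a minimal bash sketch assuming “kubectl” access to the cluster; failed_pv_report is a hypothetical helper name.

```shell
# Hypothetical helper: list every PV in phase "Failed" together with its
# status message, so Kasten (kio-*) volumes hit by this issue stand out.
# Assumes kubectl is already pointed at the affected cluster.
failed_pv_report() {
  # kubectl emits one line per PV: name <TAB> phase <TAB> status message.
  kubectl get pv -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.phase}{"\t"}{.status.message}{"\n"}{end}' |
    # Keep only Failed PVs and print "name <TAB> message".
    awk -F'\t' '$2 == "Failed" { print $1 "\t" $3 }'
}
```

For example, “failed_pv_report | grep 'no volume plugin matched'” narrows the list to the PVs showing this issue's error.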

Step 2: Attempt to Delete the Orphaned PVC

Use the following command to attempt to delete the identified orphaned PVC.

Example:

```
kubectl delete pvc kio-4e1c8777bcd411eeb71cde3cda5267f6-0 -n kasten-io
```

The PV bound to the deleted PVC is not removed and reports:

```
status:
  message: 'error getting deleter volume plugin for volume "kio-4e1c8777bcd411eeb71cde3cda5267f6-0": no volume plugin matched'
  phase: Failed
```

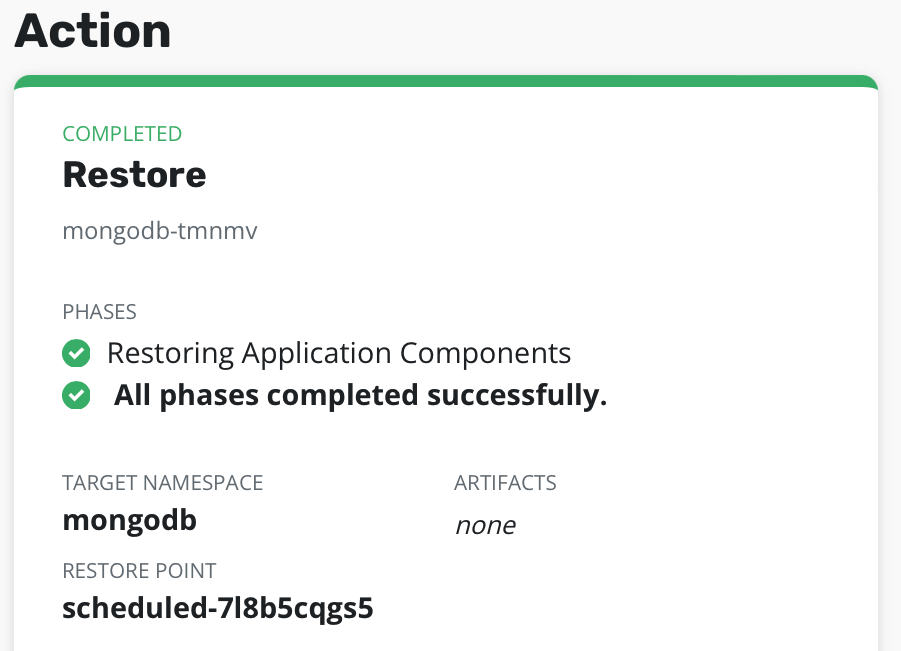
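To reproduce Step 2 in a script and observe what happens to the bound PV, the sketch below records the PV name, deletes the PVC without waiting, and then reports the PV phase. It assumes “kubectl” access; the helper name delete_pvc_and_check_pv and the CHECK_DELAY grace period (10 seconds by default) are hypothetical choices, not values from Kasten or GKE.

```shell
# Hypothetical helper: delete a PVC without waiting, then report what happened
# to the PV it was bound to. On an affected cluster the PV is expected to be
# left behind in phase "Failed" instead of being removed.
# Usage: delete_pvc_and_check_pv <namespace> <pvc-name>
delete_pvc_and_check_pv() {
  local ns pvc pv phase
  ns="$1"
  pvc="$2"
  # Record the bound PV name while the PVC still exists.
  pv="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')" || return 1
  [ -n "$pv" ] || { echo "PVC $pvc is not bound to a PV" >&2; return 1; }
  kubectl delete pvc "$pvc" -n "$ns" --wait=false || return 1
  # Assumed grace period for the persistentvolume-controller to act on the PV.
  sleep "${CHECK_DELAY:-10}"
  if phase="$(kubectl get pv "$pv" -o jsonpath='{.status.phase}' 2>/dev/null)"; then
    echo "$pv still exists, phase=$phase"
  else
    echo "$pv was deleted"
  fi
}
```

For example, “delete_pvc_and_check_pv kasten-io <pvc-name>”. On an affected cluster the output is expected to show the PV still present in the Failed phase.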

Step 3: Restore Workload

Restore the workload from the Veeam Kasten for Kubernetes snapshot or export. The restore succeeds.

Orphaned Volume Clean-Up Procedure

The following steps outline the process to clean up any orphaned volumes.

- Identify the GCE disk names of the orphaned volumes.
- Delete the orphaned disks from GCE.
- Delete the Failed Veeam Kasten for Kubernetes PV resources from the Kubernetes cluster.
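These three steps can be scripted. The following bash sketch is one way to do it, not an official Kasten tool, and it rests on several assumptions: the orphaned PVs are the Failed “kio-*” PVs shown in Step 1, each PV records its GCE disk in the in-tree field spec.gcePersistentDisk.pdName, the disk name is unique across zones in the project, and “kubectl” and “gcloud” are authenticated against the affected cluster and project. All function names are hypothetical. By default it runs in a dry-run mode and only prints the delete commands.

```shell
# Sketch of the three clean-up steps for orphaned Kasten in-tree GCE PD volumes.
# Assumptions (not taken from the KB article):
#   - orphaned PVs are named kio-* and are in phase "Failed"
#   - their spec uses the in-tree field spec.gcePersistentDisk.pdName
#   - kubectl and gcloud are authenticated for the affected cluster and project
# DRY_RUN=1 (the default) only prints the delete commands; set DRY_RUN=0 to run them.
DRY_RUN="${DRY_RUN:-1}"

# Execute a command, or just print it in dry-run mode.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "DRY-RUN: $*"
  else
    "$@"
  fi
}

# Names of Kasten (kio-*) PVs whose phase is Failed.
failed_kasten_pvs() {
  kubectl get pv --no-headers \
    -o custom-columns=NAME:.metadata.name,PHASE:.status.phase |
    awk '$2 == "Failed" && $1 ~ /^kio-/ { print $1 }'
}

# Step 1: GCE disk name behind an in-tree gce-pd PV (empty if the field is absent).
pv_disk_name() {
  kubectl get pv "$1" -o jsonpath='{.spec.gcePersistentDisk.pdName}'
}

# Zone of a GCE disk, looked up by name (assumes the name is unique across zones).
disk_zone() {
  gcloud compute disks list --filter="name=$1" --format="value(zone.basename())"
}

cleanup_orphaned_pv() {
  local pv disk zone
  pv="$1"
  disk="$(pv_disk_name "$pv")"
  if [ -z "$disk" ]; then
    echo "skipping $pv: no spec.gcePersistentDisk.pdName" >&2
    return 0
  fi
  zone="$(disk_zone "$disk")"
  if [ -n "$zone" ]; then
    # Step 2: delete the orphaned disk from GCE; keep the PV if this fails.
    run gcloud compute disks delete "$disk" --zone "$zone" --quiet || return 1
  else
    echo "disk $disk not found in GCE; removing only the PV" >&2
  fi
  # Step 3: delete the Failed PV object from the cluster.
  run kubectl delete pv "$pv"
}

# Walk every Failed kio-* PV and clean it up.
cleanup_all() {
  local pv
  for pv in $(failed_kasten_pvs); do
    cleanup_orphaned_pv "$pv"
  done
}
```

Source the file and run “cleanup_all” to review the planned deletions, then set DRY_RUN=0 and run it again to apply them. Deleting the disk before the PV keeps the PV, and with it the disk name, available if the GCE deletion fails; in that case the function stops before removing the PV.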

If this KB article did not resolve your issue or you need further assistance with Veeam software, please create a Veeam Support Case.
